The Rockchip RK3568 integrates an NPU that delivers up to 1 TOPS, and RKNN is the model format used on the Rockchip NPU platform. This article describes how to run RKNN model inference on the Runhe DAYU200 RK3568 development board under OpenHarmony 4.0 Release. A team from the Embedded Laboratory at Hunan University (Hu Yuhao, Zhao Sirong, Liu Yan, and Xie Guoqi) adapted OpenHarmony to RKNN, adding support for the Rockchip NPU and for RKNN model inference on OpenHarmony, and validated the work on the Runhe DAYU200 development board.

Overview

OpenHarmony:

OpenHarmony is an open-source operating system managed and promoted by the OpenAtom Foundation, with Huawei as its primary developer. It aims to provide a unified platform for Internet of Things (IoT) devices, covering device types from smart homes and wearables to automotive systems and industrial IoT. Its distributed architecture enables seamless connectivity and interoperability between devices, so users can control multiple terminals from a single device with a consistent service and application experience. As an open-source project with its own developer ecosystem, OpenHarmony targets high performance, low power consumption, and comprehensive security, which makes it well suited to building interconnected smart-device ecosystems. OpenHarmony 4.0 Release was launched in October 2023.

RKNN:

RKNN (Rockchip Neural Network) is a deep learning framework and toolchain developed by Rockchip, specifically designed for deploying and optimizing neural network models on its system-on-chip (SoC) solutions. RKNN provides a complete set of tools for model conversion, quantization, optimization, and inference, supporting various popular deep learning frameworks including TensorFlow, Caffe, and ONNX. With RKNN, developers can fully utilize the computational power of Rockchip's NPU (Neural Processing Unit) to implement efficient AI applications on edge and embedded devices.

DAYU200:

The DAYU200 is a development board for OpenHarmony rich devices, launched by Runhe Software. It is based on the Rockchip RK3568 and integrates a dual-core GPU and a high-performance NPU. Its quad-core 64-bit Cortex-A55 CPU runs at up to 2.0 GHz, and it supports Bluetooth, Wi-Fi, audio, video, and camera functions.

DAYU200 Development Board

PC Environment Setup

Local Environment: Windows 10/11, DAYU200 RK3568 Development Board

- Installation of Flashing Tools

Refer to the official tutorial:

https://wiki.t-firefly.com/zh_CN/ROC-RK3568-PC/Windows_upgrade_firmware.html

USB Driver:

https://www.t-firefly.com/doc/download/107.html#other_432

RKDevTool Flashing Tool:

https://www.t-firefly.com/doc/download/107.html#other_431

- Installation of hdc Tool

Refer to the tutorial:

https://forums.openharmony.cn/forum.php?mod=viewthread&tid=1458

The hdc tool ships with the OpenHarmony SDK, which can be downloaded from the daily-build page:

http://ci.openharmony.cn/workbench/cicd/dailybuild/dailylist

Find the pipeline named ohos-sdk-full or ohos-sdk-public, click 'Download Link', and select 'Full Package'.
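Once hdc is installed, you can confirm that the PC can see the board with the hdc list targets command, which lists connected devices. The function below is a minimal sketch that wraps this command for a POSIX shell on the PC (for example Git Bash on Windows). The name dayu200_connected is hypothetical. The check assumes that hdc prints [Empty], or nothing at all, when no board is attached.

```shell
# Hypothetical helper: succeed only if hdc reports at least one device.
# Assumption: `hdc list targets` prints "[Empty]" (or nothing) when no
# board is attached.
dayu200_connected() {
    targets=$(hdc list targets 2>/dev/null) || return 1
    case "$targets" in
        "" | *"[Empty]"*) return 1 ;;
        *) return 0 ;;
    esac
}
```

For example: dayu200_connected && echo "board found" || echo "check the USB cable and driver".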

Porting RKNPU Driver to OpenHarmony Kernel

1. Obtain RKNPU Kernel Driver Source Code

The driver source code is available at https://github.com/rockchip-linux/kernel. Select the 5.10 branch; the NPU driver is in drivers/rknpu. Alternatively, and preferably, use a 5.10 kernel source tree from a board vendor such as Firefly or Feilin, which already includes the RKNPU driver.
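Before porting, it helps to check that the chosen kernel tree actually contains the driver. The sketch below assumes a POSIX shell, and has_rknpu_driver is a hypothetical name. It only tests for the Kconfig and Makefile inside drivers/rknpu, because the drivers/Kconfig and drivers/Makefile entries added later in this article refer to those two files. The branch name in the example comment is left as a placeholder.

```shell
# Hypothetical check: does a kernel tree ship the RKNPU driver?
# Example (branch name left as a placeholder):
#   git clone --depth 1 --branch <5.10 branch> https://github.com/rockchip-linux/kernel rk-kernel
#   has_rknpu_driver rk-kernel && echo "RKNPU driver present"
has_rknpu_driver() {
    kernel_dir=$1
    # drivers/Kconfig sources drivers/rknpu/Kconfig, and drivers/Makefile
    # descends into rknpu/, so both files must exist.
    [ -f "$kernel_dir/drivers/rknpu/Kconfig" ] &&
        [ -f "$kernel_dir/drivers/rknpu/Makefile" ]
}
```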

2. Set Up Compilation Environment & Driver Porting

Reference:

https://forums.openharmony.cn/forum.php?mod=viewthread&tid=897

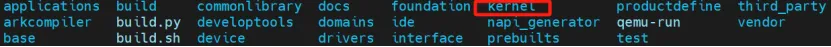

Set up the OpenHarmony compilation environment, suggesting at least 150G of disk space and over 12G of memory. After setup, the directory structure should look as follows:

First, add the following RKNPU options to the file //kernel/linux/config/linux-5.10/rk3568/arch/arm64_defconfig:

```
CONFIG_ROCKCHIP_RKNPU=y
CONFIG_ROCKCHIP_RKNPU_DEBUG_FS=y
CONFIG_ROCKCHIP_RKNPU_DRM_GEM=y
```
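Editing the defconfig by hand works, but a scripted version of this step should be safe to rerun. The sketch below uses a hypothetical function name, enable_rknpu_config. For each RKNPU symbol, it removes any earlier setting (such as a "# ... is not set" line) and then appends the =y line, so each option ends up appearing exactly once. It assumes a POSIX shell with grep on the Linux build host.

```shell
# Hypothetical helper: make the three RKNPU options appear exactly once,
# set to =y, in the given defconfig. Safe to run repeatedly.
enable_rknpu_config() {
    defconfig=$1
    [ -f "$defconfig" ] || { echo "defconfig not found: $defconfig" >&2; return 1; }
    for opt in CONFIG_ROCKCHIP_RKNPU=y \
               CONFIG_ROCKCHIP_RKNPU_DEBUG_FS=y \
               CONFIG_ROCKCHIP_RKNPU_DRM_GEM=y; do
        sym=${opt%%=*}
        # Drop earlier settings of this exact symbol ("=m", "is not set", ...).
        # "^SYM=" does not match longer names such as SYM_DEBUG_FS.
        grep -v -e "^$sym=" -e "^# $sym is not set" "$defconfig" > "$defconfig.tmp" || true
        mv "$defconfig.tmp" "$defconfig" || return 1
        printf '%s\n' "$opt" >> "$defconfig"
    done
}
```

Run it from the OpenHarmony source root, for example: enable_rknpu_config kernel/linux/config/linux-5.10/rk3568/arch/arm64_defconfig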

Then, compile the kernel separately:

sudo ./build.sh --product-name rk3568 --build-target kernel

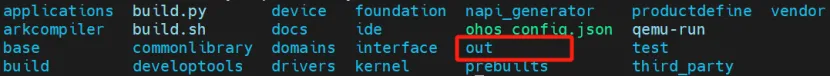

Upon completion, the directory will appear as follows:

Next, copy the driver code from drivers/rknpu into out/kernel/src_tmp/linux-5.10/drivers. In addition to drivers/rknpu itself, you must also port the related functions in the following four files:

- drivers/iommu/rockchip-iommu.c
- include/soc/rockchip/rockchip_iommu.h
- drivers/soc/rockchip/rockchip_opp_select.c
- include/soc/rockchip/rockchip_opp_select.h

Then register the driver by adding the following lines to the Makefile and Kconfig in the drivers directory:

```
# Makefile
obj-$(CONFIG_ROCKCHIP_RKNPU) += rknpu/

# Kconfig
source "drivers/rknpu/Kconfig"
```
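The copy-and-register step can be scripted in the same way. The following is a minimal sketch under stated assumptions. The name port_rknpu_driver is hypothetical. Its first argument is a drivers/rknpu directory taken from the vendor kernel, and its second is the OpenHarmony kernel tree (out/kernel/src_tmp/linux-5.10 in this article). The helper adds the two lines shown above only if they are missing. It places the Kconfig entry before the last endmenu line so the option stays inside the "Device Drivers" menu. It does not touch the four IOMMU and OPP files, which still have to be ported by hand.

```shell
# Hypothetical helper: copy the RKNPU driver into a kernel tree and hook it
# into drivers/Makefile and drivers/Kconfig, adding each line only once.
#   $1 a drivers/rknpu directory from the vendor kernel
#   $2 the target kernel tree, e.g. out/kernel/src_tmp/linux-5.10
port_rknpu_driver() {
    rknpu_src=$1
    kernel=$2
    mk_line='obj-$(CONFIG_ROCKCHIP_RKNPU) += rknpu/'
    kc_line='source "drivers/rknpu/Kconfig"'

    # Copy the driver sources (contents of $1) into drivers/rknpu.
    mkdir -p "$kernel/drivers/rknpu" || return 1
    cp -R "$rknpu_src"/. "$kernel/drivers/rknpu/" || return 1

    # Makefile: append the obj- line if it is not there yet.
    grep -qxF "$mk_line" "$kernel/drivers/Makefile" ||
        printf '%s\n' "$mk_line" >> "$kernel/drivers/Makefile"

    # Kconfig: insert before the last "endmenu" (append if there is none).
    kconfig="$kernel/drivers/Kconfig"
    if ! grep -qxF "$kc_line" "$kconfig"; then
        last=$(grep -n '^endmenu' "$kconfig" | tail -n 1 | cut -d: -f1)
        if [ -n "$last" ]; then
            awk -v n="$last" -v line="$kc_line" 'NR == n { print line } { print }' \
                "$kconfig" > "$kconfig.tmp" && mv "$kconfig.tmp" "$kconfig"
        else
            printf '%s\n' "$kc_line" >> "$kconfig"
        fi
    fi
}
```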

Next, review the rk3568 device tree and add the corresponding NPU node information. The device trees of other RK3568 boards in the 5.10 kernel are a useful reference. The main addition is the NPU power-domain node under the power-controller of the pmu node:


```dts
pmu: power-management@fdd90000 {
    ...
    power: power-controller {
        ...
        /* These power domains are grouped by VD_NPU */
        pd_npu@RK3568_PD_NPU {
            reg = <RK3568_PD_NPU>;
            clocks = <&cru ACLK_NPU_PRE>, <&cru HCLK_NPU_PRE>, <&cru PCLK_NPU_PRE>;
            pm_qos = <&qos_npu>;
        };
        ...
    };
    ...
};
```

After rebuilding the full image (for example with ./build.sh --product-name rk3568), the complete images can be found in //out/rk3568/packages/phone/images.

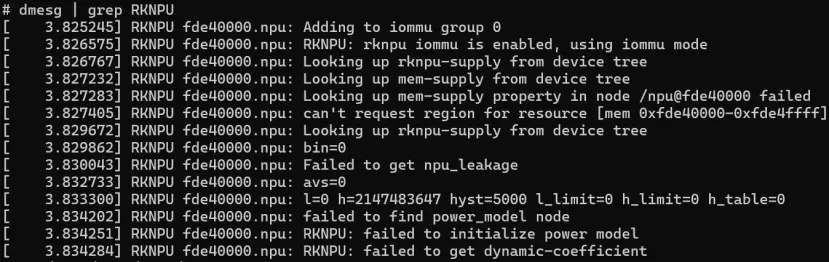

After flashing the image to the DAYU200 board using RKDevTool, connect to the mainboard via the command line on the PC with the command:

dmesg | grep RKNPU

You should see the following output:

This indicates that the kernel driver has been successfully ported.
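You can also run the same check from the PC in one step by piping the board's kernel log through hdc. The helper below is a small sketch, and rknpu_probed is a hypothetical name. Like the grep above, it only reports whether any log line mentions RKNPU, so it cannot distinguish a successful probe from an error message.

```shell
# Hypothetical helper: read kernel log text on stdin and succeed if any
# line mentions RKNPU (the same test as `dmesg | grep RKNPU`).
rknpu_probed() {
    if grep -q 'RKNPU'; then
        echo "RKNPU driver messages found"
    else
        echo "no RKNPU messages; check the defconfig options and device tree" >&2
        return 1
    fi
}
```

For example: hdc shell dmesg | rknpu_probed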

Demonstrating Model Inference

Running Environment: DAYU200 Development Board

Compiling the Inference Demo:

Compile the model inference program with OpenHarmony's LLVM toolchain. The cross-compiler is located at //prebuilts/clang/ohos/linux-x86_64/llvm/bin/clang.
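As an illustration, a build wrapper around this clang might look like the sketch below. Everything except the clang path is an assumption. The names build_demo, main.c, and the demo output are hypothetical. The RKNN runtime directory is assumed to contain include/ and lib/ with Rockchip's rknn_api.h and librknnrt.so. The sysroot is assumed to come from the OpenHarmony SDK or build output. The target triple should match the flashed system: arm-linux-ohos for a 32-bit image, aarch64-linux-ohos for a 64-bit one.

```shell
# Hypothetical build wrapper; main.c, the output name "demo" and the
# include/ + lib/ layout of the RKNN runtime directory are placeholders.
#   $1 clang from //prebuilts/clang/ohos/linux-x86_64/llvm/bin/clang
#   $2 sysroot matching the flashed system (assumption)
#   $3 target triple, e.g. arm-linux-ohos (32-bit) or aarch64-linux-ohos (64-bit)
#   $4 directory holding include/rknn_api.h and lib/librknnrt.so (assumption)
# With DRY_RUN=1 the command is printed instead of executed.
build_demo() {
    clang_bin=$1 sysroot=$2 triple=$3 rknn_dir=$4
    set -- "$clang_bin" --target="$triple" --sysroot="$sysroot" \
        -I"$rknn_dir/include" -L"$rknn_dir/lib" \
        -o demo main.c -lrknnrt
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}
```

For example, DRY_RUN=1 build_demo "$OHOS_ROOT/prebuilts/clang/ohos/linux-x86_64/llvm/bin/clang" "$SYSROOT" arm-linux-ohos "$RKNN_DIR" prints the full command line. Here OHOS_ROOT, SYSROOT, and RKNN_DIR are placeholders for your own paths.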

After compilation, use hdc to transfer the executable and the dynamic libraries it links against to the DAYU200 board:

```shell
hdc file send <PC path> <board path>
# For example:
hdc file send d:\ohtest /data/
```

If sending fails, the usual cause is that the destination path on the board is read-only. Remount it read-write from the PC:

```shell
hdc shell mount -o rw,remount /
# Or enter the OpenHarmony shell with hdc shell and run:
mount -o rw,remount /
```
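The transfer steps can be combined into one helper that runs from the PC. This is a sketch with several assumptions. The name push_to_board is hypothetical. It remounts / read-write unconditionally before sending, on the expectation that remounting an already writable root is harmless. It also assumes that hdc reports a failed command through a non-zero exit status.

```shell
# Hypothetical helper run from the PC: make the board's root filesystem
# writable, then push a file or directory with hdc.
#   $1 path on the PC, $2 destination path on the board
push_to_board() {
    src=$1
    dest=$2
    # Remounting an already writable root should be harmless.
    hdc shell mount -o rw,remount / || return 1
    hdc file send "$src" "$dest"
}
```

For example: push_to_board ./ohtest /data/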

Performing Inference on OpenHarmony:

```shell
# First, open a shell on the board:
hdc shell
# Go to the directory containing the dynamic libraries and move them to /lib or /lib64:
mv xxx.so /lib/
# Run the demo:
chmod +x ./demo
./demo
```

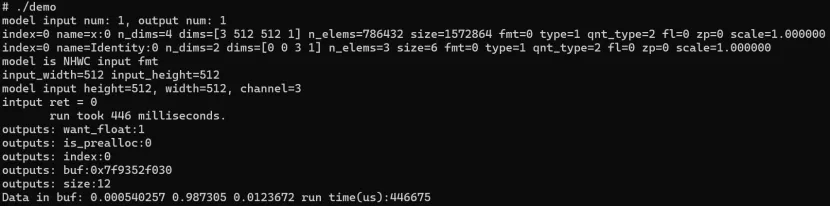

The inference results are as follows:

Inference completed successfully.
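Moving libraries into /lib requires a writable root filesystem. One possible alternative, sketched below, is to leave the .so files next to the demo and point the dynamic linker at that directory. This assumes that OpenHarmony's musl-based dynamic linker honors LD_LIBRARY_PATH for programs started from hdc shell, and that the board's shell supports POSIX functions. The name run_demo is hypothetical. If the libraries are still not found, fall back to moving them into /lib or /lib64 as described above.

```shell
# Hypothetical on-board helper (run inside hdc shell): start ./demo with the
# shared libraries taken from the demo's own directory instead of /lib.
#   $1 directory containing ./demo and the .so files it links against
run_demo() {
    dir=$(cd "$1" && pwd) || return 1
    [ -f "$dir/demo" ] || { echo "no demo in $dir" >&2; return 1; }
    chmod +x "$dir/demo" || return 1
    # Prepend the demo directory to any existing LD_LIBRARY_PATH.
    (cd "$dir" && LD_LIBRARY_PATH="$dir${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}" ./demo)
}
```

For example, run run_demo /data/ohtest, adjusting the path to wherever the files were sent.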

Conclusion

The Embedded Laboratory at Hunan University deployed RKNN models on OpenHarmony using RKNN, OpenHarmony 4.0 Release, and the DAYU200 RK3568 development board. The work involved adding kernel drivers, modifying framework configuration files, macro definitions, and API interfaces, and adding device tree nodes. It shows that RKNN and OpenHarmony can run efficient NPU-accelerated inference on resource-constrained embedded devices, and it lays a foundation for smart-device development in IoT and edge computing.